Notes on Apache NiFi logging

Russell Bateman

October 2025


NiFi uses the logback library to handle logging. Log files are written to ${NIFI_HOME}/logs, under the base installation directory, while logging itself is configured in the file logback.xml in NiFi's conf directory.

There are two logging processors that can be used in the flow (as expressed on the NiFi UI canvas): LogAttribute and LogMessage.

Sample output from nifi-app.log:

2025-10-09 11:15:32,456 INFO [Timer-Driven Process Thread-2] o.a.n.p.s.LogAttribute[id=7933b98c-016c-1000-0000-0000301a206a] \

FlowFile Attributes: {filename=file.txt, path=./, uuid=64b73b5a-73d8-4f59-9971-8608e5e8c17b}

In custom processors (as in standard and most other NiFi processors), ComponentLog is the interface for logging messages to logs/nifi-app.log. These messages can also be displayed in the NiFi UI, and they include helpful context such as the processor's unique identifier, which appears as id in the Settings tab of the Configure Processor dialog (and in the sample output above).

Custom processors are encouraged to perform their logging via ComponentLog, rather than obtaining a direct instance of a third-party logger. This is because logging via ComponentLog allows the framework to render log messages that exceed a configurable severity level to NiFi's User Interface, allowing those who monitor the dataflow from the UI to be notified when important events occur. Additionally, it provides a consistent logging format for all processors by logging stack traces when in DEBUG mode and providing the processor’s unique identifier (as noted above) in log messages.

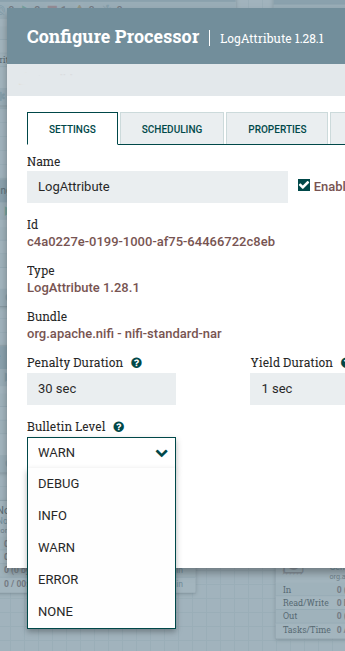

In the Settings tab, there is an item, Bulletin Level, for setting the logging level including...

Note the absence of log4j's TRACE and of the native java.util.logging levels. The default level is WARN.

import org.apache.nifi.logging.ComponentLog;

import org.apache.nifi.processor.AbstractProcessor;

import org.apache.nifi.processor.ProcessContext;

import org.apache.nifi.processor.ProcessSession;

import org.apache.nifi.processor.exception.ProcessException;

public class ClaimsItemizer extends AbstractProcessor

{

@Override public void onTrigger( ProcessContext context, ProcessSession session ) throws ProcessException

{

final ComponentLog logger = getLogger();

...

String message = "This message will be logged.";

logger.debug( message );

logger.info( message );

logger.warn( message );

logger.error( message );

logger.trace( message ); // message will never be logged

...

}

}

Though TRACE is a logback option, ComponentLog and the NiFi UI don't know anything about it.

ComponentLog does expose a trace() method, so you can call it, and you can still use the TRACE level through the underlying slf4j logger if you access it directly. However, there is no way to "awaken" it by configuration in any processor. Formally, the NiFi documentation recommends sticking with ComponentLog and the levels noted above.
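To make the Bulletin Level setting described earlier more concrete, here is a small model in plain Java. It is only a sketch: the class, enum and method names are hypothetical (none of them are NiFi types), and the rule it encodes, that a message becomes a bulletin when its level is at or above the configured Bulletin Level, is an assumption about how the threshold behaves.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model only: these types are hypothetical and are not NiFi classes.
final class BulletinLevelSketch
{
  // Ordered from least to most severe; TRACE is deliberately absent.
  enum Level { DEBUG, INFO, WARN, ERROR }

  // Assumption: a message becomes a bulletin when its level is at or above the Bulletin Level.
  static boolean becomesBulletin( Level message, Level bulletinLevel )
  {
    return message.compareTo( bulletinLevel ) >= 0;
  }

  // Lists every level that would produce a bulletin at the given Bulletin Level.
  static List< Level > bulletinsAt( Level bulletinLevel )
  {
    List< Level > shown = new ArrayList<>();
    for( Level level : Level.values() )
    {
      if( becomesBulletin( level, bulletinLevel ) )
        shown.add( level );
    }
    return shown;
  }

  public static void main( String[] args )
  {
    System.out.println( bulletinsAt( Level.WARN ) );   // prints [WARN, ERROR]
    System.out.println( bulletinsAt( Level.DEBUG ) );  // prints [DEBUG, INFO, WARN, ERROR]
  }
}
```

Under this model, the default of WARN means calls to logger.debug() and logger.info() still reach the logfile (subject to logback.xml), but produce no bulletin.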

Logfiles are usually located on the path ${NIFI_HOME}/logs.

| Filename | Purpose |
|---|---|
| nifi-bootstrap.log | Records messages related to the NiFi bootstrap process, including startup and shutdown events, and any issues encountered during the initial loading of the NiFi application. Look here to diagnose start-up issues with your instance of Apache NiFi. |
| nifi-app.log | This is the main application log file. It contains general framework messages, component-specific messages and various events related to the NiFi application's runtime. Look here to diagnose flow issues with what's on the UI canvas of Apache NiFi. |
| nifi-user.log | Contains authentication and authorization messages, especially when NiFi is secured (set up to require authentication). |
| nifi-request.log | Tracks HTTP requests made to the NiFi user interface and ReST API, providing a record of user interactions and API calls. |
| nifi-deprecation.log | Captures specific warnings and messages related to the use of deprecated components or features within NiFi. In the Java implementation code, this means use of the @DeprecationNotice annotation. |

NiFi uses a rolling policy for its logs to prevent individual files from growing too large. This policy, configured in logback.xml, creates new log files based on a set time interval or when a file exceeds a certain size.

For example, the default configuration for nifi-app.log rolls the log every hour or when it reaches 100MB, retaining up to 30 files of history.
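The two roll triggers described above (a time interval and a size limit) can be sketched as a single decision. This is a toy model in plain Java, not logback's own code: the class and method names are made up for illustration, and the one-hour interval and 100MB cap are simply the values from the example above.

```java
import java.time.LocalDateTime;
import java.time.temporal.ChronoUnit;

// Toy model only: a hypothetical stand-in for the decision a rolling policy makes.
final class RollDecisionSketch
{
  static final long MAX_BYTES = 100L * 1024 * 1024;   // 100MB, per the example above

  // Roll when the clock has moved into a new hour or the active file has reached the size cap.
  static boolean shouldRoll( LocalDateTime fileOpened, LocalDateTime now, long currentBytes )
  {
    boolean newHour = !fileOpened.truncatedTo( ChronoUnit.HOURS )
                                 .equals( now.truncatedTo( ChronoUnit.HOURS ) );
    return newHour || currentBytes >= MAX_BYTES;
  }

  public static void main( String[] args )
  {
    LocalDateTime opened = LocalDateTime.of( 2025, 10, 9, 11, 15 );

    // same hour, small file: keep writing to the current file
    System.out.println( shouldRoll( opened, LocalDateTime.of( 2025, 10, 9, 11, 59 ), 1024 ) );      // prints false
    // the hour has changed: roll
    System.out.println( shouldRoll( opened, LocalDateTime.of( 2025, 10, 9, 12, 0 ), 1024 ) );       // prints true
    // same hour, but the size cap has been reached: roll
    System.out.println( shouldRoll( opened, LocalDateTime.of( 2025, 10, 9, 11, 20 ), MAX_BYTES ) ); // prints true
  }
}
```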

NiFi provides processors that allow for the generation of custom messages, such as LogMessage and LogAttribute. By default, these messages are written to nifi-app.log, but logback.xml supports reconfiguration to force them to a separate file. For enterprise environments, it is a best practice to send logs to some centralized log management system like Splunk, Elasticsearch or a database for easier analysis and alerts.

A great deal has been cut out of the logback.xml below; it's here only as a placeholder and to illustrate some of its relevant parts.

<?xml version="1.0" encoding="UTF-8"?>

<configuration scan="true" scanPeriod="30 seconds">

<shutdownHook class="ch.qos.logback.core.hook.DefaultShutdownHook" />

<appender name="APP_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>logs/nifi-app.log</file>

</appender>

<appender name="USER_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>logs/nifi-user.log</file>

</appender>

<appender name="REQUEST_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>logs/nifi-request.log</file>

</appender>

<appender name="BOOTSTRAP_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>logs/nifi-bootstrap.log</file>

</appender>

<appender name="DEPRECATION_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>logs/nifi-deprecation.log</file>

</appender>

<appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">

<!-- goes to console if present (for example, debugger console in IntelliJ IDEA

or the console window if NiFi is launched using /opt/nifi/bin/nifi.sh start)

-->

</appender>

<!-- valid logging levels: TRACE, DEBUG, INFO, WARN, ERROR -->

<logger name="org.apache.nifi" level="INFO"/>

<logger name="org.apache.nifi.processors" level="WARN"/>

<logger name="org.apache.nifi.processors.standard.LogAttribute" level="INFO"/>

<logger name="org.apache.nifi.processors.standard.LogMessage" level="INFO"/>

<!--

Logger for capturing user events. We do not want to propagate these

log events to the root logger. These messages are only sent to the

user-log appender.

-->

<logger name="org.apache.nifi.web.security" level="INFO" additivity="false">

<appender-ref ref="USER_FILE"/>

</logger>

<logger name="org.apache.nifi.web.api.config" level="INFO" additivity="false">

<appender-ref ref="USER_FILE"/>

</logger>

...

<!-- we might put this here ourselves (user of NiFi) -->

<logger name="com.acme.fhir" level="DEBUG" additivity="false">

<appender-ref ref="CONSOLE" />

<appender-ref ref="APP_FILE" />

</logger>

<root level="INFO">

<appender-ref ref="APP_FILE" />

</root>

</configuration>

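Additivity determines whether a logger's events are also passed up to the appenders of its ancestor loggers. In NiFi's case, the ancestor is the root logger, whose appender writes logs/nifi-app.log. If you configure a logger such as the following: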

<logger name="com.acme.fhir" level="WARN" additivity="false"> <appender-ref ref="CONSOLE" /> </logger>

...you are in essence saying you don't want what gets logged by that logger to reach logs/nifi-app.log.
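The effect of additivity can be modeled in a few lines of plain Java. This is a sketch under stated assumptions, not logback's implementation: the class and its methods are hypothetical, appenders are represented only by their names, and the root logger is assumed to reference APP_FILE as in the configuration excerpt earlier. The walk mirrors the documented behavior: an event goes to the appenders of the logger and of each ancestor, stopping after the first logger whose additivity is false.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model only: hypothetical types that imitate how additivity limits appender inheritance.
final class AdditivitySketch
{
  static final String ROOT = "ROOT";

  private static final class LoggerConfig
  {
    final List< String > appenders;
    final boolean        additive;

    LoggerConfig( List< String > appenders, boolean additive )
    {
      this.appenders = appenders;
      this.additive  = additive;
    }
  }

  private final Map< String, LoggerConfig > configured = new HashMap<>();

  AdditivitySketch configure( String name, boolean additive, String... appenders )
  {
    configured.put( name, new LoggerConfig( List.of( appenders ), additive ) );
    return this;
  }

  // Walks from the named logger up to ROOT, collecting appender names along the way.
  List< String > appendersFor( String loggerName )
  {
    List< String > reached = new ArrayList<>();
    String name = loggerName;

    while( true )
    {
      LoggerConfig config = configured.get( name );
      if( config != null )
      {
        reached.addAll( config.appenders );
        if( !config.additive )
          break;                          // additivity="false": do not climb any higher
      }
      if( name.equals( ROOT ) )
        break;
      int dot = name.lastIndexOf( '.' );
      name = ( dot < 0 ) ? ROOT : name.substring( 0, dot );
    }
    return reached;
  }

  public static void main( String[] args )
  {
    AdditivitySketch notAdditive = new AdditivitySketch()
        .configure( ROOT, true, "APP_FILE" )
        .configure( "com.acme.fhir", false, "CONSOLE" );
    System.out.println( notAdditive.appendersFor( "com.acme.fhir.Parser" ) );   // prints [CONSOLE]

    AdditivitySketch additive = new AdditivitySketch()
        .configure( ROOT, true, "APP_FILE" )
        .configure( "com.acme.fhir", true, "CONSOLE" );
    System.out.println( additive.appendersFor( "com.acme.fhir.Parser" ) );      // prints [CONSOLE, APP_FILE]
  }
}
```

The class name com.acme.fhir.Parser is invented for the example; any logger under com.acme.fhir behaves the same way.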

The root logger is the fundamental logger in logback's hierarchy. From the root logger all other loggers inherit their configuration by default. For example, in Java code...

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

public class Foo

{

private static final Logger logger = LoggerFactory.getLogger( Foo.class );

...

}

...the logger created this way automatically becomes a child of the root logger and inherits its default properties.

It is as if...

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>%d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} -%kvp- %msg%n</pattern>

</encoder>

</appender>

<root level="DEBUG">

<appender-ref ref="CONSOLE" />

</root>

</configuration>

In short...

16:06:09.031 [main] INFO chapters.configuration.Foo -- Entering Foo application.

16:06:09.046 [main] DEBUG chapters.configuration.Foo -- Did it again!

This output is generated by the root logger's console appender (CONSOLE), which is the default configuration that all loggers inherit unless specifically overridden. So, the root logger is in essence the parent of all loggers in your application and provides the default behavior that cascades down to all child loggers.
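The way a level cascades from the root logger down to child loggers can be illustrated with a short model. This is only a sketch in plain Java, not logback code: the class and method names are hypothetical, levels are plain strings, and the sample values are the ones from the NiFi logback.xml excerpt earlier in these notes. A logger without a level of its own takes the level of its nearest configured ancestor, falling back to the root level.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model only: hypothetical types that imitate level inheritance by logger name.
final class EffectiveLevelSketch
{
  private final Map< String, String > levels = new HashMap<>();
  private final String                rootLevel;

  EffectiveLevelSketch( String rootLevel )
  {
    this.rootLevel = rootLevel;
  }

  EffectiveLevelSketch set( String loggerName, String level )
  {
    levels.put( loggerName, level );
    return this;
  }

  // Strips one dotted segment at a time until a configured logger is found.
  String effectiveLevel( String loggerName )
  {
    String name = loggerName;

    while( true )
    {
      String level = levels.get( name );
      if( level != null )
        return level;
      int dot = name.lastIndexOf( '.' );
      if( dot < 0 )
        return rootLevel;                 // nothing configured on the way up
      name = name.substring( 0, dot );
    }
  }

  public static void main( String[] args )
  {
    EffectiveLevelSketch config = new EffectiveLevelSketch( "INFO" )
        .set( "org.apache.nifi", "INFO" )
        .set( "org.apache.nifi.processors", "WARN" )
        .set( "org.apache.nifi.processors.standard.LogAttribute", "INFO" );

    System.out.println( config.effectiveLevel( "org.apache.nifi.processors.standard.LogAttribute" ) ); // prints INFO
    System.out.println( config.effectiveLevel( "org.apache.nifi.processors.standard.PutFile" ) );      // prints WARN
    System.out.println( config.effectiveLevel( "com.acme.Foo" ) );                                     // prints INFO
  }
}
```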

Once logback.xml is set up, what often becomes most important in analyzing the logged output is the pattern. Here's a short example that purposely conflicts with the default pattern established above:

<pattern>%-4relative [%thread] %-5level %logger{5}.%method:%line - %message%n</pattern>

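Component by component, here's what each part of the pattern above does:

%-4relative: milliseconds elapsed since the application started, left-justified and padded to at least 4 characters

[%thread]: name of the thread that issued the log event, in square brackets

%-5level: logging level, left-justified and padded to 5 characters

%logger{5}.%method:%line: logger name abbreviated toward 5 characters, followed by the name of the calling method and the line number

- %message: the log message itself, after a literal hyphen

%n: platform-dependent line separator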

A log entry using this pattern might look like:

1234 [main] DEBUG c.i.p.Foo.bar:42 - Starting test execution

This pattern is particularly useful for debugging and testing scenarios where you need to track execution flow, timing, and precise code locations of log events.
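The padding and ordering in this pattern can be imitated with String.format, which may help when reading the width specifiers. This is only an approximation in plain Java, not how logback renders events: the class and method are hypothetical, the already-abbreviated logger name is passed in as-is, and the sample values are invented.

```java
// Toy model only: a hypothetical helper that mimics the layout of
// %-4relative [%thread] %-5level %logger{5}.%method:%line - %message%n
final class PatternSketch
{
  static String format( long relativeMillis, String thread, String level,
                        String abbreviatedLogger, String method, int line, String message )
  {
    // %-4d and %-5s left-justify and pad, as %-4relative and %-5level do
    return String.format( "%-4d [%s] %-5s %s.%s:%d - %s%n",
                          relativeMillis, thread, level, abbreviatedLogger, method, line, message );
  }

  public static void main( String[] args )
  {
    // prints: 1234 [main] DEBUG c.i.p.Foo.bar:42 - Starting test execution
    System.out.print( format( 1234, "main", "DEBUG", "c.i.p.Foo", "bar", 42, "Starting test execution" ) );
    // prints: 7    [main] INFO  c.i.p.Foo.bar:43 - Done
    System.out.print( format( 7, "main", "INFO", "c.i.p.Foo", "bar", 43, "Done" ) );
  }
}
```

The second line shows why the widths matter: short values are padded so that the thread and logger columns stay aligned.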

The default one (shown elsewhere on this page, but here's the essence):

<pattern>%d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} -%kvp- %msg%n</pattern>

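Pattern 1 (this appendix) is better for development/debugging, where you need the elapsed time since start-up and the exact method and line of each logging call.

Pattern 2 (the default shown higher on the page in the logback configuration discussion) is better for production, where you need wall-clock timestamps and fuller logger names without the cost of computing the caller's method and line.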

The use of ComponentLog in custom processors is essential in maintaining a consistent logging approach and format across NiFi flows. NiFi's standard processors and those of many other contributors follow this.

In production, logs/nifi-app.log isn't typically accessible. Think of NiFi running inside a Docker container and what it would take to get inside to look at this logfile. Or what would it take to view the logfile if NiFi were installed and running on a remote host to which you do not have access?

Instead, reporting tasks and/or other mechanisms may be used to send log messages to external systems such as databases or centralized logging platforms (like Splunk or Elasticsearch) for easier analysis and monitoring.

Also, you can enable DEBUG for a processor (covered elsewhere in these notes), then look at the Bulletin Board, reached from the General (hamburger) menu.

Note: What's documented here doesn't really work because of how NiFi's logging is set up. I use a) ComponentLog directly in custom processor code, but b) slf4j in lower-level code called from NiFi custom processor code. I do not find complete success with this (in JUnit testing). It could be that I need to reassess what effect additivity has. That this does not work very well is one reason I set out to corral logging in Apache NiFi.

To use logback configuration in JUnit testing, put the following, modified for what you want, into src/test/resources/logback-test.xml. This will supersede the production logback.xml configuration.

<configuration>

<appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">

<encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">

<pattern>%-4r [%t] %-5p %c{3} - %m%n</pattern>

</encoder>

</appender>

<appender name="FILE" class="ch.qos.logback.core.FileAppender">

<file>./target/log</file>

<encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">

<pattern>%date %level [%thread] %logger{40} %msg%n</pattern>

</encoder>

</appender>

<logger name="org.apache.nifi" level="INFO" />

<logger name="org.apache.nifi.security.util.crypto" level="DEBUG" />

<root level="INFO">

<appender-ref ref="CONSOLE" />

</root>

</configuration>

I would like to ask a question about a custom processor using ComponentLog,

but then calling down into some other code that simply uses:

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

public class Foo

{

private static final Logger logger = LoggerFactory.getLogger( Foo.class );

...

}

and then makes logging statements. Ostensibly this logging goes through

logback.xml and reaches /opt/nifi/logs/nifi-app.log, doesn't it?

When a custom NiFi processor uses ComponentLog, but then calls down into other code that uses standard slf4j logging (like your Foo class example), that logging will indeed go through the standard logback configuration and reach /opt/nifi/logs/nifi-app.log.

Here's how the logging hierarchy works in this scenario:

The standard slf4j logging will be controlled by:

in your custom processor...

final ComponentLog processorLogger = getLogger();

processorLogger.info( "Processor starting..." ); // goes to NiFi UI + nifi-app.log

// call into your business logic

Foo.doSomething(); // this will log via standard slf4j

in Foo class...

private static final Logger logger = LoggerFactory.getLogger( Foo.class );

public static void doSomething()

{

logger.info( "Business logic executing..." ); // goes to nifi-app.log via logback.xml

}

Both log messages will appear in /opt/nifi/logs/nifi-app.log, but only the ComponentLog message will also appear in the NiFi UI.

This is actually a common and perfectly valid pattern in NiFi custom processors!

I know this works to some degree; I use this method all the time in other microservices. However, I need to be able to say here what works and what doesn't. So, a little more experimentation and I'll come back.

I'll add a checkmark to each thing I confirm with explanations and elaboration where needed.

* This will not be effective when, in production, Bulletin Level is "turned on". (DEBUG is the only "debug" option possible as TRACE isn't supported.)